Selfie-satisfied to augment reality with their mugs

Although Apple had touted TrueDepth as the key hardware component of its “unlock with your face” FaceID system, very little had come to light about exactly how TrueDepth worked, or the kind of access developers would have to the device.

A decade ago, TrueDepth’s gestation began with Israeli firm PrimeSense’s first-of-its-kind “depth camera”. It added a Z (depth) value to the planar X-by-Y pixel image, giving every pixel an extra piece of metadata: its distance from the camera. The PrimeSense device projected a pattern of dots in the infrared, invisible to the human eye, and read the reflected points of light with a paired infrared camera. That scan, plus quite a bit of software post-processing, produced depth maps quickly enough for real-time use.
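For readers who want the geometry behind "project dots, read them back", here is a minimal Python sketch of depth-from-triangulation. It assumes an idealised, rectified projector/camera pair. Each projected dot appears in the infrared image shifted by a disparity d (in pixels) that is inversely proportional to its distance, so Z = f·b/d, where f is the camera's focal length in pixels and b is the projector-to-camera baseline. PrimeSense's real pipeline was proprietary and far more involved, and the constants and function names below are hypothetical.

```python
"""Toy structured-light triangulation: turn per-pixel dot shifts into a depth map.

Assumes an idealised, rectified projector/camera pair. The constants are
illustrative only and are NOT PrimeSense or TrueDepth specifications.
"""
from typing import List, Optional

FOCAL_PX = 580.0    # hypothetical IR camera focal length, in pixels
BASELINE_M = 0.075  # hypothetical projector-to-camera separation, in metres


def depth_from_disparity(disparity_px: float) -> Optional[float]:
    """Triangulate Z = f * b / d; return None where no dot was matched (d <= 0)."""
    if disparity_px <= 0:
        return None
    return FOCAL_PX * BASELINE_M / disparity_px


def depth_map(disparities: List[List[float]]) -> List[List[Optional[float]]]:
    """Attach a Z value (metres) to every (x, y) pixel of the planar image."""
    return [[depth_from_disparity(d) for d in row] for row in disparities]


if __name__ == "__main__":
    # A 2x2 grid of measured dot shifts; 0.0 marks a pixel with no matched dot.
    shifts = [
        [43.5, 21.75],
        [0.0, 87.0],
    ]
    for row in depth_map(shifts):
        print(row)
```

Because depth varies as 1/d, a one-pixel error in a matched dot's shift matters far more for distant objects than for near ones. That is one reason sensors of this kind work best at short range, such as a face held at arm's length.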

Depth mapping wasn’t unique to PrimeSense, but earlier approaches had either offered very low resolution, like the ultrasonic rangefinder Polaroid pioneered in its SX-70 Sonar cameras, or required incredibly expensive LIDAR-like hardware. Simple silicon plus software gave PrimeSense a 100-to-1 price advantage over its nearest competitors.

That innovation caught the eye of Microsoft, which was looking for a peripheral that might help the Xbox 360 match or surpass Sony’s EyeToy. An early example of camera-based real-time image processing and gesture recognition, EyeToy never really caught on with the console crowd, but it pointed to a future where the console controller might eventually disappear, replaced by a sensor and game-specific gestures.

PrimeSense had exactly what Microsoft needed to kick things up a notch: an inexpensive, high-quality, reasonably high-resolution depth camera, perfect for integrating the gamer’s body (or pretty much anything else in the real world) into the game.

Only Kinect and the Microsoft crowd

Microsoft licensed the tech and revealed its work on “Project Natal” at E3 in 2009. It took another year to bring Kinect to market, where it was greeted with incredible hype and brisk launch sales that never turned into lasting momentum.

The technology worked, and it was certainly interesting, but it suffered from the same chicken-and-egg problem that hamstrings most console peripherals: game developers won’t build for a peripheral unless it is ubiquitous, and gamers won’t buy one unless it is ubiquitously supported. Gored on the horns of that dilemma, Kinect never gained the forward motion it needed to succeed in the highly competitive, and highly price-sensitive, console market.

Kinect did find an interesting afterlife as a PC peripheral once Microsoft released Windows drivers for it, bringing depth-camera capabilities to garden-variety PCs. Although it was always little more than a sub-market within a sub-market, Kinect for Windows became a core part of Microsoft’s story going forward, with glitzy demos crafted by Microsoft Research: office spaces mapped out by multiple Kinects, and work environments with pervasive depth sensing and gestural controls. It made for some great videos, but no sales.

It made no PrimeSense

Well, there was one major sale: that of PrimeSense itself. Not, as one might expect, to Microsoft. Instead, at the end of 2013, PrimeSense was gobbled up by Microsoft’s biggest frenemy – Apple.

What did Apple want with PrimeSense? The Colossus of Cupertino gave nothing away. PrimeSense went silent, even as it grew from 300 employees to well over 600. What was it working on? Speculation abounded, generally tied to Apple’s widely rumoured efforts to create fabled, mythical AR “spectacles”. Convincing AR calls for some form of depth sensing, whether through the depth-sensing cameras of Google’s Project Tango or something closer to Kinect, as in Microsoft’s HoloLens. It seemed obvious that PrimeSense would be a key element of Apple’s move into augmented reality.

So it eventually proved, but not in the way anyone had predicted. Face-scanning had already shown up on smartphones, without much success. Samsung’s own goal with a Galaxy S8 face scanner that could seemingly be fooled by a photograph made the whole idea look a bit ridiculous. Could a face be stolen so easily? Not if you focus on its contours, which, like the ridges of a fingerprint, make each face unique. Measuring contours calls for a depth camera. Although Apple may not have had this use case in mind when it bought PrimeSense, the marriage of depth camera and facial identification makes perfect sense, while facial identification without a depth map isn’t credible.
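The photograph problem can be illustrated with a toy check, sketched in Python. A printed photo held up to the camera is essentially flat, while a real face has relief: the nose tip sits centimetres closer than the cheeks. This is emphatically not how Face ID works. The threshold and function name are hypothetical, and the sketch only shows why depth data makes the flat-photo trick detectable.

```python
"""Toy illustration of why depth defeats a flat photo: a real face has relief.

NOT Apple's Face ID algorithm; the threshold and function name are hypothetical.
"""
from typing import List

MIN_RELIEF_M = 0.02  # hypothetical: require ~2 cm between nearest and farthest point


def has_facial_relief(face_depths_m: List[List[float]]) -> bool:
    """Return True if depth values across the face region vary enough to look 3D."""
    values = [z for row in face_depths_m for z in row]
    return max(values) - min(values) >= MIN_RELIEF_M


if __name__ == "__main__":
    printed_photo = [[0.40, 0.40], [0.40, 0.40]]  # flat sheet held at 40 cm
    real_face = [[0.42, 0.39], [0.40, 0.43]]      # some points centimetres closer than others
    print(has_facial_relief(printed_photo), has_facial_relief(real_face))
```

A photo tilted away from the camera would also show a depth gradient and pass this naive test. A practical system therefore has to compare the measured contours against an enrolled 3D model of the owner's face rather than merely check that some relief exists.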

At last year’s iPhone launch, Apple extolled the virtues of FaceID and the TrueDepth camera that made it happen. As a technical marvel, TrueDepth scores high marks, squeezing the rough equivalent of the Kinect 1 into approximately one-thousandth the volume and one-hundredth the power budget. That’s Moore’s Law squared, and it demonstrates what a motivated half-trillion-dollar technology firm can achieve. That ambition came at a cost: iPhone X shipped in lower numbers than expected because the TrueDepth module proved difficult to manufacture at scale, keeping yields low and iPhone X in short supply until late December.

Then I saw your face… now I’m a believer

When Brad Dwyer sat down to have a play with his new iPhone X, he knew it had all the makings of a Kinect. But could it do what the Kinect could? One of Apple’s APIs gave Dwyer direct access to the depth map. “It’s noisy,” Dwyer told The Register. “You can point it at something and see depth map data, but it’s really messy. Hard to make anything out. But when you point it at a face, then it’s completely different.”

In true Apple fashion, the TrueDepth camera has been carefully tuned to be very good at one thing: faces. “It’s hard to tell where the hardware ends and the software begins,” says Dwyer. “But you can tell it’s not just using the data from the TrueDepth camera to map faces. There’s a lot more going on.”

Dwyer has a suspicion about what that might be. “I don’t think it’s an accident that Apple introduced ARKit at the same time they introduced CoreML. I think they’re tied together around the TrueDepth camera.”

If Dwyer is right, it’s a classically Apple approach built on careful hardware and software integration: noisy sensor data captured by the TrueDepth camera passes through CoreML, which detects the contours of the face, cancelling the noise and leaving clean, smooth data ready for a programmer.

After a weekend of learning, Dwyer sat down with his staff to brainstorm some ideas for TrueDepth apps. “In an hour we’d come up with at least 50 of them.”

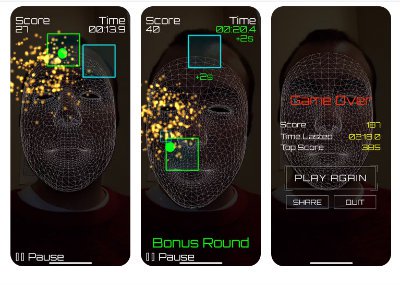

A week later Dwyer submitted his first app, Noze Zone, for Apple’s approval. Noze Zone turns your face into the controller for a simple stare-em-down-and-blow-em-up video game. It was a toe in the water, something to establish Dwyer’s firm as innovators in this newest of interfaces. That’s when things got complicated. “It took about a month for Apple to approve Noze Zone, because of an article in the Washington Post.”

On 30 November the Bezos-owned broadsheet published an article asking pointed questions about how the facial data gathered via TrueDepth + ARKit + CoreML would be used. Would it be shared? Would it be uploaded? Would apps be able to sneak a look at your face and read your emotions? Certainly, a firm like Facebook would be very interested in that: more food for its own algorithms. In the wake of the article, Apple, which publicly prides itself on engineering products to protect customer privacy, developed a set of guidelines that any TrueDepth app would need to adhere to. Or face rejection.

“Apple asked us what we were doing with the face data, where it was going, that sort of thing. When they were satisfied we were keeping all of that private, they approved Noze Zone – a month after we’d submitted the app.” Out on the cutting edge, sometimes a small developer has to wait for a behemoth to catch up with itself.

All indications point to a next-generation iPad equipped with a TrueDepth camera – a sign the technology will spread pervasively across Apple’s iOS product line. Whether these cameras remain user-facing is a bigger question. “Right now,” muses Dwyer, “ARKit can detect flat surfaces like walls and floors. With a TrueDepth camera pointed outward, ARKit would be able to detect everything within a space.”

That may happen, but given how closely ARKit and CoreML must work together just to recognise faces, extending the system to identify the shape of everything may be a big ask. It’s more likely that faces are the first step in a graduated approach that will rapidly grow to encompass our bodies: detect faces, then arms, torsos and legs. That alone would be quite a lot, giving TrueDepth the same expressive capabilities as the original Kinect.

What about inanimate objects? Will Apple need to teach this noisy sensor to recognise dinner plates or trees or automobiles? Here TrueDepth starts to reveal itself as the leading edge of an Apple strategy in augmented reality, building a base of hardware and software techniques that begin to nibble away at one of the biggest technical challenges facing augmented reality – recognising the world.

Dwyer is excited. “We’re spending time working on what we can build now with TrueDepth – a photorealistic mapping app very much like a high-quality version of Apple’s own animojis. But we’re looking forward to the AR spectacles.” ®